It’s bracing to spend time with people who know in their hearts that your way of life is going the way of the horse and buggy.

In an earlier post, I described a few legal concepts in vogue at In Re Books, a conference about law and the future of the book that I attended on 26 and 27 October, and I characterized the conference as haunted by the ghost of the late Google Books settlement. In this post, I’d like to relay what the conferencegoers had to say about the future of publishing, including the problem of how to price e-books.

Most of the conferencegoers seemed to be lawyers, law professors, or librarians. One of the exceptions, the author James Gleick, noted that everyone present was united by the love of books—and then added that the love sometimes took the form of a wish to have the books for free. But the lawyers themselves didn’t seem to think of their doomsaying as in any way volitional. Some of them even seemed to look upon the publishing industry with pity; they hoped it would soon be out of its misery.

Consider, for example, the battle being waged between Amazon and traditional publishers over the price of e-books. Most people in publishing see their side as waging a crusade in the name of literature. Their version of the story goes like this: A few years ago, Amazon had managed to establish a near-monopoly on e-books by offering low prices. Amazon in many cases sold e-books to customers for even less than the wholesale price that publishers demanded, losing money for the sake of market share. Publishers were alarmed. If customers came to expect such low prices habitually, and if Amazon’s monopoly remained unbroken, publishers would be forced in time to lower their wholesale prices radically. Editors, designers, publicists, and sales representatives would lose their jobs, and books would no longer be made with the same level of care—if publishers managed to remain in business at all. When Apple debuted the iPad in 2010, publishers saw a chance to rebel. They agreed with Apple to sell e-books on what was called the “agency model”: publishers were to set the retail price, and Apple was to take a percentage, the way it did with the apps sold through its iTunes store. With many titles, the publishers were agreeing to sell e-books to Apple for a wholesale price lower than the one they had been getting from Amazon, but the power to control retail price seemed worth the sacrifice. The publishers gave Amazon a choice: accept the agency model or lose access to books. Amazon complained that the publishers were abusing their “monopoly” over books under copyright, and the retailer briefly tried to coerce publishers by erasing the “buy” buttons from the Amazon pages of the publishers’ print titles. In the end, though, Amazon gave in, and over the next couple of years, Amazon’s market share in e-books fell. Today the Nook and the iPad offer the Kindle stiff competition. In April 2012, however, the Department of Justice accused the publishers and Apple of antitrust violations.
A few publishers settled, for terms that required them to allow Amazon to discount their e-books as before. Others are still fighting the charges. Amazon, meanwhile, has become a publisher itself—of serious books, as well as vanity titles. How, most people in publishing want to know, can the Department of Justice fail to see that Amazon is trying to drive traditional publishers out of business?

The lawyers at the In Re Books conference were able to see that, as it happens. They just didn’t see it the way people in publishing do. They saw, rather, a historical process of Hegelian implacability, and they saw the publishers as desperate characters who had resorted to possibly illegal maneuvers in a futile attempt to prevent it. “You know, the agency model,” said Christopher Sagers, a law professor at Cleveland State University who specializes in antitrust, “we used to just call it price-fixing.” Sagers allowed that a recent Supreme Court ruling, the Leegin case of 2007, was somewhat indulgent toward so-called “vertical” price-fixing, which consists of a series of contracts between a manufacturer and its distributors and retailers, along the vertical axis of the supply chain, that allows a manufacturer to determine retail prices of its goods. (Apple famously prohibits its retailers from discounting its products without permission, for example.) But “horizontal” price-fixing remains illegal, as do certain strains of “vertical” price-fixing, Sagers said, and the Department of Justice thought that the publishers and Apple were guilty along both axes. It was no defense, Sagers pointed out, to say that the publishers were choosing to lose money. The law didn’t care about that. Nor was it a defense to say that publishing is special. Throughout history, Sagers said, companies have responded to antitrust accusations by claiming to be special, and Sagers didn’t think publishing was any more special than, say, the horse-and-buggy-making industry had been. In Sagers’s opinion, publishing is suffering through the advent of a technological change that is going to make distribution cheaper and, through price competition, bring savings to consumers. Creative destruction is in the house, and there is no choice but to trust the market. 
“Someone will figure it out,” said Sagers, “it” being a new economic engine for literature, and he apologized for sounding like Robert Bork by saying so. As for the charge that Amazon was headed for a monopoly, Sagers’s reply was, in essence, Well, maybe, but the answer isn’t to let a cartel set prices.

The legal question at issue is somewhat muddied by the fact that publishers are allowed to set the retail prices of books and even e-books in a number of other countries, where publishing is heralded as special. Germany, France, the Netherlands, Italy, and Spain allow the vertical price-fixing of books, as Nico van Eijk, of the University of Amsterdam, explained at the conference. The United Kingdom, Ireland, Sweden, and Finland, on the other hand, do not. Van Eijk thought he saw a pattern: The warm and emotional countries indulge their literary sector, while the cold and as it were remorseless ones subject it to the free market. The nations that allow for “resale price maintenance,” as it’s called, in publishing justify the legal exception for three reasons. They believe that it brings a bookstore to every village, that it makes possible a wide selection of books in those bookstores, and that it enables less-popular books to be subsidized by more-popular ones. In other words, the argument for resale price maintenance rests largely on the contribution that local, independent bookstores make to cultural life. And bookstores do thrive in countries where publishers may set retail prices. The trouble is that the same arguments don’t work as well with e-books, as van Eijk pointed out. E-bookstores are virtually ubiquitous, thanks to widespread internet access, and every e-book available for sale is available in almost every e-bookstore. As for cross-subsidization, van Eijk dismissed it as already doubtful even as a justification for printed books. (In fact, though several people I spoke to at the conference seemed either unaware of it or not to believe in it, the current publishing system does allow for cross-subsidization. Most books of trade nonfiction wouldn’t get written without it.
Publishers advance substantial sums to writers who propose books that sound promising, and publishers can afford the bets because they’re buying a diversified portfolio: if the biography of Henry VIII doesn’t make it big, maybe the cultural history of the Mona Lisa will. If publishers are driven out of business, only heirs and academics are likely to be able to put in the years of research necessary to write a book of history, unless the market comes up with a new funding mechanism.) Most European countries seem skeptical of allowing resale price maintenance for e-books, but “we’ll always have Paris,” van Eijk joked. French law, he explained, not only allows but requires fixed pricing for e-books. Moreover, France insists on extraterritoriality: even non-French booksellers must comply if they want to sell to French customers.

Niva Elkin-Koren, of the University of Haifa, predicted a “world of user-generated content,” where the tasks of editing and manufacturing books will be “unbundled,” and “gatekeeping,” which now occurs when Manhattan editors turn down manuscripts, will take place through online reviews after the fact. She seemed to see the “declining role of publishers,” as she put it, as a liberation, but I’m afraid I found her vision bleak. In the future, will we all be reading the slush pile? Jessica Litman, of the University of Michigan, also thought little of publishers, accusing them of angering libraries and gouging authors. As a bellwether, Litman pointed to the example of a genre author she likes who now sells her books online. I found myself wondering if Litman was extrapolating from an experience with academic and textbook publishers, some of whom do bully authors and have resorted to extorting the captive markets of university libraries and textbook-buying students. In my experience, trade publishers go to great pains to keep prices low and authors happy.

In the last panel session, a masterful analysis of the economics of publishing in America and Britain was presented by John Thompson, of the University of Cambridge, author of Merchants of Culture. Thompson began by surveying the forces of change in the last couple of decades. In the 1990s, the rise of bookstore chains killed off independent bookstores. The introduction of computerized stocking systems brought greater control over when and where books appeared in stores. Once upon a time, paperbacks were publishing’s bread and butter, but mass-marketing strategies originally devised for paperbacks were applied to hardcovers, and in time hardcovers became the moneymakers. Literary agents grew more powerful. A handful of corporate owners consolidated control.

Thanks to these changes, said Thompson, today there are large publishers and many tiny ones, but very few that are middling in size. That’s because a midsize publisher misses out on the economies of scale available to a large one, and misses out on the barter-circle of favors that indie presses are willing to exchange with one another. Large publishers are preoccupied with “big books,” which Thompson defined as “hoped-for best-sellers,” because their corporate owners demand annual growth of 8 to 10 percent, even though the overall market for books is stagnant. At a large publisher, the only way to keep your job is to pursue big books, however mathematically doomed the pursuit may be in the larger scheme of things. Big-book status depends, in Thompson’s formulation, not so much on fact as on “a web of collective belief”; big books are identified by the “expressed enthusiasm of trusted others.” Certain people—often, literary agents—become brokers in this economy of belief, enabling them to extract higher prices. Thompson called the result “extreme publishing.” Every year, the reasonable sales predictions aren’t good enough, and editors are forced to try to “close the gap,” that is, to come closer to the sales figures that their corporate overlords are demanding—a task for which only big books are big enough even to be plausible. Meanwhile, as bookstores are shuttered, it’s becoming harder and harder to bring new titles to customers’ attention. In hopes of making a big book, publishers pay to feature their books in store windows, where a new book has about six weeks to prove itself. If it shows signs of doing well, publishers have become adept at “pouring fuel on a flame,” as Thompson put it. But they’ve also become ruthless at killing off the weak. About 30 percent of books are returned from bookstores to publishers, and most are pulped.

In the United States, said Thompson, publishers face agents who are able to demand higher advances for their authors. In the United Kingdom, where the Net Book Agreement, which allowed publishers to set retail prices, collapsed in the 1990s, publishers face powerful retailers like Tesco who not only sell at a discount but demand cuts on wholesale prices.

As for e-books, Thompson stressed that the market is changing fast enough to make a fool out of anyone claiming to know what it will do next. He noted that when e-books were introduced, most analysts expected business titles to be the pioneers, but instead genre fiction led the way. Forty to fifty percent of romances, science fiction novels, and thrillers are now sold in digital form. (I thought I saw a hint of an explanation for the divergence in a talk given by Stuart M. Shieber, a professor of computational linguistics at Harvard. After analyzing the pros and cons of print books and e-books—including such factors as resolution, weight per reading unit, capacity for random access, and pride of ownership—Shieber predicted that when display technology has been perfected, “E-book readers will be preferable to books” but “Books will still be preferable to e-books.” If Shieber is right, then perhaps what differentiates the genres is where a reader’s attachment lies. If your attachment is to the experience of reading rather than to a particular set of titles, you’re more likely to prefer an e-reader. But if your attachment is to particular books, you’ll prefer to read them in print. After all, at the extreme, if all you want to do is re-read a single text, you probably won’t bother with an electronic device.) But even literary fiction is shifting, Thompson noted. Twenty-five percent of the sales of Jonathan Franzen’s Freedom were e-books, and fifty percent of Jeffrey Eugenides’s The Marriage Plot.

Though he stressed the hazards of guessing, Thompson concluded by making a number of short-term predictions. He thought Amazon would continue to grow and bookstore chains to wither. He foresaw more consolidation, as weak publishers fold and impatient corporate owners decide to get out of the publishing business. As bookstores vanish, they will be taking their windows and display tables with them, and it will become harder and harder to introduce new books to readers, a battle that will have to be fought online. Thompson expected that different kinds of books will continue to shift from print to digital formats at different speeds. Price deflation for e-books will be perhaps publishers’ greatest challenge, and publishers will very likely be forced to reduce costs in order to remain profitable—shedding staff and limiting themselves even more rigidly to big books than they do now. Nipping at their heels, all the while, will be an army of small presses and start-ups, many of whom will be trying to come up with new kinds of “disintermediation”—new ways to abridge a book’s journey from writer to reader.

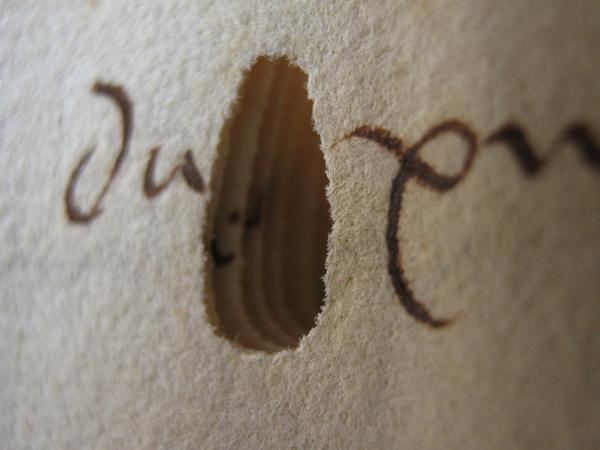

What does it all mean? In looking over these notes, I find myself wondering if copyright is meaningful in the digital world without some power to set retail prices. The rigorous application of free-market logic to issues of copyright sounds slightly off-key to me. It is nowhere written that the law has to defer to macroeconomics, which copyright, by its very nature, defies. No market left to its own devices would come up with copyright. The whole point of it is that society has decided that the written word is special, and has recognized that perfect competition in the literary sphere quickly leads to prices so low that no writer can make a living. (An important subsidiary point is that society demands, in exchange for granting this exceptional economic protection, a temporal limit to a copyright’s term, but we’re not litigating that aspect of the case today.) Amazon’s publicists had a point when they lamented that copyright is a monopoly. In the market for a particular work of literature, it is one, a legal one. It is authorization to sell a work of literature at a higher per-unit price than the market would support if everyone were free to print it. Authorization alone would be meaningless, however. The government also has to prevent a publisher’s competitors from selling the same work at a lower price. In her remarks at the conference, Elkin-Koren predicted that as books turn into e-books, they will move from being commodities to being services, and publishing will merge with retailing. “There is no difference between a bookseller, a publisher, and a library,” she said. But if she’s right, then if copyright is to have any force, shouldn’t the power to set a book’s price at its “first sale” be extended to the price of the license sold to the reader-consumer? The extension might be necessary to preserve the spirit of copyright. 
And given the ease with which digital copies can be made and shared, it might also be necessary to retain beyond the “first sale” of an e-book the copyright controls that are exhausted upon the first sale of a printed book. That may sound inelegant, but there’s no reason to think that the best way for law to foster literature is going to be natural-looking. Copyright never has been natural, and it never will be. The challenge is to find the least amount of legal protection adequate to retaining publishing as a viable business.